[This will end up being quite long, so I will post it in three stages for easier digestion. Expect two updates during the week.

First update on generations added.]

OK, so the title is a bit ambitious, but I've finally found a way to measure this. Anyone who's read my personal blog knows that one of my obsessions is documenting what's going on among young people. They typically leave very little in terms of a written record, and as adults most people erase the memories of their adolescence -- to the benefit of their mental health -- and replace them with whatever accords with their grown-up worldview. For example, they may imagine adolescents as relatively innocent creatures, whereas their memories -- if unearthed -- would remind them of what an anarchic jungle secondary school was, in contrast to the much more tranquil social lives of adults.

So, the need for a clearer picture of young people's lives is great, yet clearly unmet. As luck would have it, The Harvard Crimson (the undergraduate newspaper) has placed all of its content online, stretching back to the paper's origin in 1873, and it is fully searchable. This allows me to search for some signal of the zeitgeist year by year and plot the strength of this signal over time. If you read my three-part series at GNXP on the changing intellectual climate, as judged by what appears in academic journals, the approach is the same. (Here are those articles:

Death of Marxism, etc.,

A follow-up, and

Popularity of science in studying humans.)

In short, I count the number of articles in a given year that have some keyword -- "Marxism," for example -- and then standardize this by dividing by a very common neutral word. This protects against seeing an imaginary trend that is simply due to the newspaper pumping out many more articles over time (or fewer in hard times). Ideally, I would standardize by using "the" since it appears in every article and therefore gives us the total number of articles, answering the question "What percent of all articles in that year contained this keyword?" Unfortunately, the Crimson search engine won't allow me to use "the," but it did let me search for "one," which is also a highly frequent word and will be a decent substitute. Before getting into the meat of the post, here is the number of articles found by searching for "one" across time:
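Here is a minimal sketch of this standardization in Python. It assumes the yearly article counts have already been copied by hand out of the Crimson search results into dictionaries keyed by year. The function name and the example numbers are hypothetical and for illustration only; they are not real Crimson counts.

```python
def standardized_frequency(keyword_counts, baseline_counts):
    """For each year, divide the number of articles containing a keyword by the
    number of articles containing a common baseline word (here assumed to be "one").

    Both arguments are dicts mapping year -> article count. Years with no baseline
    articles are skipped to avoid dividing by zero.
    """
    result = {}
    for year, n_keyword in keyword_counts.items():
        n_baseline = baseline_counts.get(year, 0)
        if n_baseline > 0:
            result[year] = n_keyword / n_baseline
    return result


# Hypothetical counts, not taken from the Crimson archive:
shares = standardized_frequency({1970: 30, 1990: 60}, {1970: 1000, 1990: 4000})
# The raw count doubles from 1970 to 1990, but the standardized value halves,
# because the baseline grew fourfold over the same period.
```

This is exactly the kind of imaginary trend the standardization guards against: without dividing by the baseline, the rise in raw counts would look like rising interest.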

We were correct to standardize somehow, given the huge increase since the early 1990s (probably reflecting the greater ease of desktop publishing with cheap computers and software).

A real improvement over the previous series that used JSTOR is that the Crimson archives run right up to the present, whereas JSTOR typically has a 5-year lag between an article's publication and its appearance in JSTOR. The only limitation is that we see only what's going on among upper-middle-class young people rather than all of them, but I'm mostly looking at events or topics that permeate our society, rather than academic fads as before. (Although I will probably look into that as well using the Crimson database.) So these youngsters ought to be fairly representative.

Moving on to the substance, I've put together graphs on three broad topics that are of great importance to young people. Adults also tend to worry about where the next generation stands on these topics. They are identity politics, religion, and generational awareness.

Identity politics

Everyone knows that the 1960s brought a sea change in college student culture, but I don't think anyone has presented a clear picture of how the concern with racism, sexism, etc. began or how it has changed over time to the present. In particular, most people (in my experience) over-estimate how prevalent identity politics were in the '60s, while forgetting how widespread the corresponding hysteria was in the early 1990s.

I began at the earliest year in which the word appears or 1960, whichever came later, and ended in 2008. The y-axis shows the count of articles with the keyword divided by the count of articles with "one." I chose the following identity politics keywords: "racism" or "racist," "sexism" or "sexist," "homophobia" or "homophobic," "date rape," and "hate speech." I also made a composite which sums all of them up. Here are the results:
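A minimal sketch of the composite in Python, assuming it is the sum of the standardized series for each keyword and that a keyword contributes zero in years before it first appears. Because every series shares the same "one" denominator, this equals summing the raw keyword counts and then dividing once. The function name and example values are hypothetical.

```python
def composite_index(series_by_keyword):
    """Sum several standardized keyword series (dicts of year -> value) into one index.

    Years in which a keyword has no value, e.g. before the term first appears,
    contribute 0 for that keyword. Output years are returned in sorted order.
    """
    years = set()
    for series in series_by_keyword.values():
        years.update(series)
    return {
        year: sum(series.get(year, 0.0) for series in series_by_keyword.values())
        for year in sorted(years)
    }


# Hypothetical standardized values, not real Crimson data:
index = composite_index({
    "racism": {1970: 0.03, 1992: 0.04},
    "sexism": {1992: 0.01},
})
```

One design consequence worth keeping in mind: an article that mentions both "racism" and "sexism" is counted once for each, so the composite measures total topic mentions per baseline article rather than the share of articles touching any of the topics.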

"Racism" increases sharply through the '60s, peaks in 1970, and declines moderately through the '70s and '80s. However, starting in the late '80s, there's another surge which peaks in 1992. After the early '90s panic, though, the preoccupation with racism has declined substantially, so much so that it is now back down to its pre-late-'60s level. You'll recall how little widespread disruption there was in the wake of Hurricane Katrina, the Jena Six, and the Duke lacrosse hoax, despite every professional activist -- and even Kanye West on a televised awards show -- struggling to whip up society into another revolution. But 2006 had none of the racial hysteria to fuel it that 1992 did, so it was impossible to spark another round of race riots in L.A.

As for "sexism," it only begins in 1970. Many people who weren't alive back then don't realize how little of a role women's liberation played in The Sixties. But I used to be a radical activist in my naive college days, and Z Magazine operator Michael Albert emphasized that second-wave feminism was largely a delayed response to the perceived sexism of the anti-war, civil rights, and anti-capitalist movements that formed the basis of the counter-culture's concerns. Although there is not much change during the '70s and '80s, the series starts at a fairly high level, so it's not as though there were few adherents -- there just wasn't a massive increase. But once again, starting in 1988 there's a resurgence that peaks in 1992. This is when third-wave feminism was born, and it coincided with the resurgence of racial politics.

However, just as with race, panic about sex plummeted shortly afterward and is currently even lower than its initial level. Again, recall how few massive protests there were -- if any -- about the Duke lacrosse hoax, whose bogus premise was a bunch of jocks raping a stripper. Or for that matter, how pathetic the response was to the Larry Summers brouhaha of early 2005. It surely generated some controversy among academics, but even that didn't last very long, and no one outside of academia gave a shit. In particular, the students didn't care -- influence from their leftist professors notwithstanding. If the zeitgeist lacks an obsession with sex roles, their feminist professors can preach all they want, but it will only fall on deaf ears.

Paying attention to homophobia only begins in 1977 -- 8 full years after the Stonewall Riots. And remember, that was in 1969, a ripe time for liberationist and revolutionary movements. This underscores the importance of using quantitative data in reconstructing history, since most people nowadays would imagine that back in the turbulent '60s, they were surely fighting against homophobia the way that their counterparts do now. Far from it. Back in the '60s, and even for most of the '70s and early '80s, campus radicals and liberals couldn't have cared less. Again, back then it was all about civil rights, stopping the imperialist war machine, and maybe smashing capitalism -- not about gay marriage or getting more women into science careers.

As we saw with "racism" and "sexism," "homophobia" saw a sharp rise in the late '80s and peaked in 1989 or '90, before dropping precipitously afterward. Currently it is more or less where it was before the late '80s surge. Liberals and young people may support gay marriage, but the larger meta-narrative of homophobia, as the activists would say, does not interest them.

Now we come to two more specific topics. "Date rape" basically tracks third-wave feminism, although there is an isolated occurrence in 1979 (which I exclude from the graph to keep the trend clear). Quite simply, there is rape and there is not-rape. "Date rape" was a term that tried to criminalize sex that wasn't rape, but where the girl regretted it or was unsure of what was happening. Probably the guy was stinking drunk too. Importantly, claims about "date rape" drugs later turned out to be bogus, as doctors in the UK pointed out -- most of the women claiming to have been slipped a "date rape drug" had no such drug in their system, although they typically did have lots of alcohol or other hard drugs present. It was essentially a witch-hunt or moral panic. By now, we know what to expect -- a sharp rise during the late '80s that peaks in 1992 and falls off a cliff shortly thereafter. Nowadays no one takes the idea seriously.

"Hate speech" shows roughly the same pattern, although it doesn't get started quite as early. It appears suddenly in 1990, peaks in 1991, and plummets right away. Aside from an anomalous jump in 2002 - '03 (which may reflect some event specific to Harvard), it has remained very low for nearly 15 years. Compared to college students during the heyday of Generation X back in 1991 - '92, young people today are more likely to view the concept of "hate speech" as a thinly veiled attempt to censor unpopular viewpoints.

It's interesting to note the differences in scale among the five topics. The "racism" scale is 3 times as great as those for "sexism," "homophobia," and "date rape," confirming what many others have observed -- that in the struggle for a bigger piece of the identity politics pie, race has trumped sex or sexuality. The scale for "hate speech" is about one-third that of those three topics, perhaps because it is so specific, while "racism" pops up in many more contexts.

The composite identity politics index shows roughly the same pattern that we've noted before. There's a jump during the '60s which peaks in 1970 -- this only reflects a concern with racism. There is little or slightly negative growth during the '70s and '80s, but this was only the calm before the storm. In the late '80s, another wave of panics sweeps through, and there is a sustained peak from 1989 through 1992. I have often pointed out in my personal blog and less often at GNXP that the peak of the social hysteria is 1991 - 1992, and this confirms that claim.

As with other epidemics, eventually it burns out, and obsessing over identity politics is now at or below its pre-late-'60s level. This overall pattern does not change even if I remove the two topics of "date rape" and "hate speech" that might seem to bias things toward producing an early '90s peak. Hard as it may be to believe for anyone younger than the Baby Boomers, identity politics was simply a very small piece of the radical chic calling back in the '60s -- it was about civil rights, opposing the war, and fighting capitalism.

Generational awareness

We often speak of young people as an entire generation, but that only works when they have strong generational awareness or solidarity. Otherwise, they are merely a cohort. To give a personal example, I am too young to be part of Generation X, yet I'm a bit older than the Millennials. Almost no one my age (roughly mid-to-late 20s) is stuck in the culture of their adolescence and college years or ever will be, in the way that many Baby Boomers are culturally stuck in 1968 and many Gen X-ers are stuck in 1992. It is possible to be a traitor to your generation if you're a Boomer or X-er, but since my cohort doesn't view itself as a Generation, the idea of defecting makes no sense.

The same goes for the cohort born between roughly 1958 and 1964 -- their age-mates will not burn them at the stake for saying that they never liked disco music or punk rock, unlike a Boomer who said he didn't like the Beatles' later albums or a Gen X-er who said he always thought alternative rock was lame.

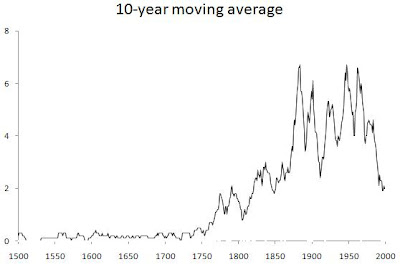

In any case, how do we quantify this sentiment? I simply searched the Crimson for "generation," which will turn up any time a member of a Generation writes about their age-mates as though they were a cohesive group, as well as when older people recognize the young people of today as being different and a cohesive group. I assume that its secondary use as a synonym for creation is roughly constant over time, so that changes reflect its primary use. The counts for the very early years are low, and the number of articles with "one" is also low, so I'm not so sure that the standardized measurement is telling us something real for that time period. Thus, along with the standardized graph, I've included one that shows just the counts. Here are the results:

The trendline is exponential, although other simple trends show roughly the same picture. It seems that during the Gilded Age and the Progressive Era, thinking in generational terms was not very common, although it does turn up toward the end of the Progressive Era. I'll get to my conjecture about this after surveying the other periods.
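The post doesn't say how the exponential trendline was fitted. One common approach, assumed here, is ordinary least squares on the logarithm of the values, which fits values ≈ A·exp(b·year). It requires every value to be positive, so years with zero counts would have to be dropped first. The function name is hypothetical.

```python
import math


def fit_exponential_trend(years, values):
    """Fit values ~= A * exp(b * year) by least squares on log(values).

    Returns a function mapping a year to the fitted trend value.
    Raises ValueError if any value is not strictly positive.
    """
    xs = list(years)
    values = list(values)
    if any(v <= 0 for v in values):
        raise ValueError("exponential fit needs strictly positive values")
    ys = [math.log(v) for v in values]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope and intercept of the straight-line fit in log space.
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    log_a = y_mean - b * x_mean
    return lambda year: math.exp(log_a + b * year)


# A toy series that doubles every year lies exactly on an exponential trend:
trend = fit_exponential_trend([2000, 2001, 2002], [1.0, 2.0, 4.0])
```

Fitting in log space means each year's deviation is judged proportionally rather than in absolute terms, which suits a series whose overall level grows by orders of magnitude over a century.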

During the Roaring Twenties and through the Great Depression and WWII, the level is much greater than the historical trend and stays there for a long time. This may strike us as unexpected because we associate inter-generational conflict mostly with the late 1960s -- but if you thought young people were going crazy then, you should have seen them during the 1920s! Women started driving, smoking, swearing, and cutting their hair short like men -- take that, you dinosaurs! Jazz and later swing were just as much of a middle finger to older people as rock and roll was later on -- perhaps more so, given how much jazz broke with other forms of popular and classical music that formed the cultural background. Rock and roll, compared to its background, wasn't so different. One central way by which young people mark themselves off from older people is by inventing their own slang, and they indeed made a bunch of it in the 1920s, much of which is still with us today.

After WWII and through the first half of the 1960s -- the Golden Age of American Capitalism -- the level dips below the historical trend. The name "Silent Generation" was coined in 1951 to refer to young people at the time, and silent they were. If we must use "generation" instead of cohort, I prefer using distinct names for what I've been calling Generations -- Boomers, X-ers, etc. -- and numbered Silent Generations for everyone else (i.e., Silent Generation 1, 2, 3, ...). Of course, after them come the Baby Boomers, although surprisingly the late '60s level is not so far above the historical trend. Still, in absolute terms, it's as high as it was during the 1920s, even if it doesn't last as long.

There is a dip below trend for most of the 1980s, when Silent Generation 2 was in college. Then there's a spike in the early 1990s, reflecting Generation X finding its megaphone, to everyone's annoyance. It's hard to make out the end -- it looks mostly at the historical trend, perhaps with a recent jump. Sometime in the middle of the next decade, we'll be hit by another wave of social hysteria, and that will crystallize the young people then (say, age 16 to 24) into a new generation. We already have a name for them -- the Millennials -- but their generational self-awareness doesn't seem very deep to me right now. So far, voting for Obama is the only way they've made us hear the voice of a new generation. But just wait.

I'm not too sure what causes the increasing historical trend -- maybe it just shows that the word is becoming more and more common to describe something we already talked about before, and hence the trend isn't interesting. Or it could mean that with an increasing pace of cultural change, we are able to pay better attention to generational changes, and we can therefore talk about them a lot more than before.

What's really interesting are the oscillations above and below the trend, which correspond pretty well to what we consider the heydays of Generations and Silent Generations, respectively. Trying to piece it all together, I think that generational awareness is low when times are prosperous and young people make up a small share of the population. This combination of good economic times and little competition means they can more easily establish themselves during early adulthood on their own. They don't feel shortchanged or embittered, so they aren't anti-establishment (which in practice means anti-elders). In contrast, when economic times are bad and there are a lot of young people competing to establish themselves, anxiety about the future sets in, many feel cheated -- they went to college and didn't end up making it rich -- and they have to band together to support each other.
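To pick out these oscillations mechanically, one option is to compare each year's value with the fitted trend and group consecutive observations that fall on the same side. This is a minimal sketch under that assumption; the function name is hypothetical, the trend can be any year-to-value function (for instance an exponential fit), and a value exactly equal to the trend is counted as below it.

```python
from itertools import groupby


def runs_relative_to_trend(series, trend):
    """Split a year -> value series into consecutive runs above or below a trend.

    `trend` is any function mapping a year to its trend value. Returns a list of
    (first_year, last_year, is_above) tuples in chronological order. Runs are
    consecutive observations, so gaps between observed years are not checked.
    """
    years = sorted(series)
    runs = []
    for is_above, group in groupby(years, key=lambda y: series[y] > trend(y)):
        group = list(group)
        runs.append((group[0], group[-1], is_above))
    return runs


# Hypothetical values against a flat trend of 1.0, not real Crimson data:
runs = runs_relative_to_trend(
    {1920: 2.0, 1921: 1.5, 1950: 0.5, 1951: 0.8, 1968: 1.2},
    lambda year: 1.0,
)
```

Each run with `is_above` set to `True` would be a candidate Generation heyday and each `False` run a candidate Silent Generation, though a single noisy year can split or merge runs, so the output is a starting point for reading the graph rather than a substitute for it.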

This is really just a rough guess, and I don't have good data to present on the percent of the population that's 16 to 24, or what their average wages were, over this entire time period. And there are exceptions, of course. But looking at the whole thing, that's what I see.

Next update: religion, which will cover five topics and include a composite index.